Fun with Google Dorks

Google Dorks are a great way to uncover information semi-passively. A dork is a specially crafted search query that surfaces information that shouldn't be public. In this blog post, I will showcase some useful queries, show their impact, and provide some remediation steps.

The Queries

Both queries I'll show are very similar.

intitle:"index of /" intext:"settings.py"

and

intitle:"index of /" intext:".env"
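Both queries follow the same pattern, intitle:"index of /" intext:"FOO", where only the filename changes. As a small illustration, this Python sketch builds that query for a given filename and URL-encodes it into a search link. The helper names are hypothetical. The https://www.google.com/search?q= link format is an assumption about how you would run the search, not something the technique depends on.

```python
from urllib.parse import urlencode


def build_dork(filename: str) -> str:
    """Hypothetical helper: the shared 'open directory containing FILE' dork."""
    return f'intitle:"index of /" intext:"{filename}"'


def search_url(query: str) -> str:
    """Turn a query into a Google search link (assumed URL format)."""
    # urlencode() uses quote_plus(), so spaces become '+' and ':', '"', '/' are escaped.
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    print(build_dork(".env"))
    print(search_url(build_dork(".env")))
```

Swapping in a different filename is all it takes to target another kind of leaked file.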

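The core of both is intitle:"index of /".

This returns results that contain "index of /" in the page title.

Pages with titles like this are usually open directories: when a folder has no index file and directory listing is enabled, the HTTP server (for example Apache's mod_autoindex or nginx's autoindex) generates an "Index of /..." page that lists every file in it.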
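To check whether a page on your own server is one of these generated listings, a rough heuristic is to look at its title. This sketch uses only Python's standard html.parser and assumes the listing uses an "Index of /..." title, which is what Apache's mod_autoindex and nginx's autoindex produce by default. Other servers need extra patterns (Python's own http.server, for instance, titles its listings "Directory listing for /"). The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self._in_title = False
        self._done = False

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names already lowercased.
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def looks_like_directory_listing(html: str) -> bool:
    """Heuristic: Apache/nginx autoindex pages are titled 'Index of /path'."""
    parser = TitleParser()
    parser.feed(html)
    return parser.title.strip().lower().startswith("index of /")
```

Feed it the HTML of a page you fetched from your own site; a True result means the server is listing that directory.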

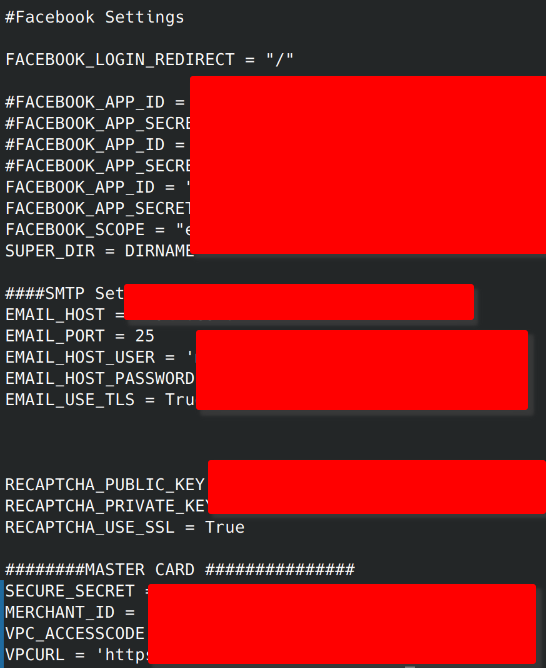

settings.py is the configuration file for Django applications.

This file is likely to contain database credentials, the secret key used for cookie signing, and in rare cases, SMTP server credentials.
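To make that concrete, here is a hypothetical excerpt of the kind of settings.py these listings expose. The setting names (SECRET_KEY, DATABASES, EMAIL_HOST_PASSWORD and so on) are standard Django settings. Every value is a made-up placeholder, and a real project may read some of them from the environment instead of hardcoding them.

```python
# Hypothetical Django settings.py excerpt: all values are placeholders.
# If this file sits in an open directory, anyone can read every line of it.

# Used by Django for cryptographic signing (signed cookies, password-reset tokens, ...).
SECRET_KEY = "django-insecure-placeholder-not-a-real-key"

# Database credentials.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "appdb",
        "USER": "appuser",
        "PASSWORD": "placeholder-db-password",
        "HOST": "db.example.internal",
        "PORT": "5432",
    }
}

# SMTP credentials for outgoing mail.
EMAIL_BACKEND = "django.core.mail.backends.smtp.EmailBackend"
EMAIL_HOST = "smtp.example.com"
EMAIL_PORT = 587
EMAIL_USE_TLS = True
EMAIL_HOST_USER = "someone@example.com"
EMAIL_HOST_PASSWORD = "placeholder-smtp-password"
```

Each of these values is directly usable by whoever reads the file: the database password opens the database, and the secret key lets an attacker forge anything Django signs with it.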

A cursory exploration of the Google results turned up a terrifying number of hits and high-value credentials, including:

Gmail SMTP credentials

Postgres/MySQL database credentials

SendGrid API tokens

Twilio API keys

reCAPTCHA API keys

Facebook App IDs and secret keys

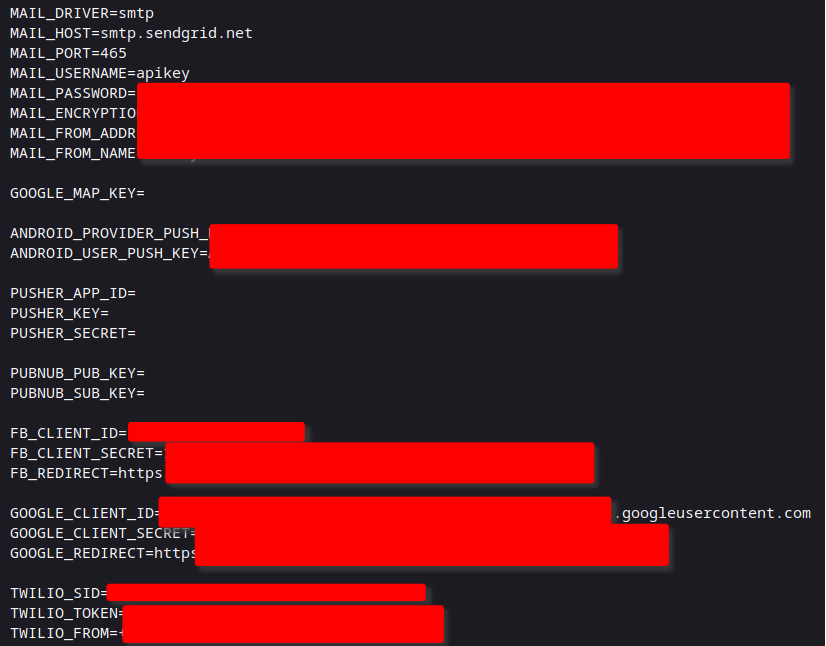

The second query returns directory listings that contain .env files.

These are common wherever Docker is in use (Docker Compose reads a .env file from the project directory), but many frameworks use them too; Laravel, for example, keeps its configuration in .env.

The credentials in these files overlapped significantly with the findings from the first query. However, some more interesting credentials turned up as well:

PayPal secrets

Laravel app keys

SMTP credentials

Facebook App IDs

Google client IDs and secrets

Twilio API keys

JWT secret keys

AWS access keys and bucket names
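When auditing your own servers, one quick way to triage an exposed .env file is to parse it and flag the keys whose names look like credentials. This is a minimal sketch. The parser handles only simple KEY=VALUE lines, # comments, an optional export prefix and matching quotes; real dotenv libraries handle more. The keyword list in SENSITIVE_HINTS is an assumption that you would tune for your own stack.

```python
import re

# Hypothetical keyword list: substrings that suggest a key holds a credential.
SENSITIVE_HINTS = ("SECRET", "PASS", "TOKEN", "KEY", "CLIENT_ID")

# KEY=VALUE, optionally prefixed with "export".
LINE_RE = re.compile(r"^\s*(?:export\s+)?([A-Za-z_][A-Za-z0-9_]*)\s*=\s*(.*)$")


def parse_env(text: str) -> dict[str, str]:
    """Minimal .env parser: skips blanks and comments, strips matching quotes."""
    values = {}
    for line in text.splitlines():
        if not line.strip() or line.lstrip().startswith("#"):
            continue
        match = LINE_RE.match(line)
        if not match:
            continue
        key, value = match.groups()
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key] = value
    return values


def sensitive_keys(env: dict[str, str]) -> list[str]:
    """Names of non-empty entries whose key suggests a credential, sorted."""
    return sorted(
        key
        for key, value in env.items()
        if value and any(hint in key.upper() for hint in SENSITIVE_HINTS)
    )
```

Running the contents of a .env file through parse_env and then sensitive_keys returns only the key names, so the secrets themselves never end up in your terminal or logs.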

Why Does This Happen?

Before getting to remediation, let's discuss how these files ended up in Google search results. Several conditions must all be present for this to occur:

Configuration files and .env files are stored in the web root.

Directory listing is enabled.

robots.txt does not stop search engines from crawling the files (or there is no robots.txt at all).
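The three conditions can be modeled as a simple conjunction. The robots.txt part can be checked with Python's standard urllib.robotparser. The other two are passed in as booleans, since how you determine them depends on your server. This is a simplified sketch under those assumptions: robots.txt only governs well-behaved crawlers, and "Googlebot" is just an example user agent. The function names are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def crawl_allowed(robots_txt: str, path: str, user_agent: str = "Googlebot") -> bool:
    """Would a crawler that honors robots.txt be allowed to fetch this path?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, path)


def can_end_up_indexed(in_web_root: bool, listing_enabled: bool,
                       robots_txt: str, path: str) -> bool:
    """All three conditions have to hold at the same time."""
    return in_web_root and listing_enabled and crawl_allowed(robots_txt, path)
```

With an empty robots.txt, crawl_allowed returns True for any path. With User-agent: * followed by Disallow: /.env, it returns False for /.env, but that only keeps polite crawlers away; it does nothing to stop a person or a malicious bot.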

The Mitigations

Disallow access to configuration files at the HTTP server. For Apache, this requires a rule that denies all access to those files (Require all denied on Apache 2.4, or the older Deny from all syntax). Similarly, for nginx-based hosts, deny rules should be written for the files. Disabling directory listing (Options -Indexes in Apache, autoindex off in nginx) also removes the "Index of /" pages these dorks depend on. Additionally, Django project files should not be stored in the web root at all: the application runs behind a WSGI server, so nginx or Apache never needs to serve those files directly. Those steps alone would prevent access to the files.

An entry in robots.txt would not be sufficient remediation, since only good-faith crawlers respect those entries. Listing those files in robots.txt may even be counterproductive, because attackers read robots.txt to decide where to focus their attention.
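The Apache and nginx rules themselves are server configuration, so as a stand-in that can be run and tested locally, here is the same idea applied to Python's built-in http.server. It denies dotfiles such as .env along with known config file names, and it refuses to generate directory listings. This is a sketch for experimentation, not a production server. The names in DENIED_NAMES are assumptions based on the files discussed in this post, and the handler name is hypothetical.

```python
from http.server import SimpleHTTPRequestHandler
from urllib.parse import unquote, urlsplit

# Hypothetical deny list, mirroring the files discussed in this post.
DENIED_NAMES = {"settings.py", "config.py"}


def is_denied(url_path: str) -> bool:
    """True for any dotfile/dot-directory segment (.env, .git/...) or a known config file."""
    path = unquote(urlsplit(url_path).path)  # drop query string, decode %xx
    segments = [seg for seg in path.split("/") if seg]
    if any(seg.startswith(".") for seg in segments):
        return True
    return bool(segments) and segments[-1] in DENIED_NAMES


class HardenedHandler(SimpleHTTPRequestHandler):
    """Static file handler that hides config files and disables directory listings."""

    def send_head(self):
        # send_head() serves both GET and HEAD, so this covers both methods.
        if is_denied(self.path):
            # 404 rather than 403, so the response doesn't confirm the file exists.
            self.send_error(404, "Not Found")
            return None
        return super().send_head()

    def list_directory(self, path):
        # Same intent as "Options -Indexes" (Apache) or "autoindex off" (nginx).
        self.send_error(403, "Directory listing disabled")
        return None
```

To try it, serve a test directory with http.server.ThreadingHTTPServer and functools.partial(HardenedHandler, directory="some/dir"), then request /.env and / with a browser or curl. Both should be refused, while ordinary files are still served.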

Conclusion

Google dorks like intitle:"index of /" intext:"FOO" can reveal highly sensitive information when access permissions are misconfigured.

Although this information could also be found by directory brute forcing, Google searches are passive: the search itself never sends a request to the target server.

Subjectively, the number of results Google returns is likely far smaller than the number of directories that are actually open.